Anthropic on Building Agents

Start with simple prompts, optimize them with comprehensive evaluation, and add multi-step agentic systems only when simpler solutions fall short.

Agentic systems trade latency and cost for better task performance. Use simpler solutions when possible. For single calls, consider retrieval and in-context examples.

Frameworks simplify low-level tasks and chaining. However, they add layers of abstraction that are harder to debug and inspect, and they tend to encourage complexity, so in general it's best to use LLM APIs directly. There are cookbooks available.

Choosing between agentic experiences

Agents

- Complex tasks

- operate autonomously: the LLM directs its own tool use

- Better when flexibility and model-driven decision-making are needed at scale

Workflows

- Prescriptive, pre-defined workflows

- orchestrated through pre-defined code paths

- offer more predictability and performance for well-defined tasks

Building Blocks

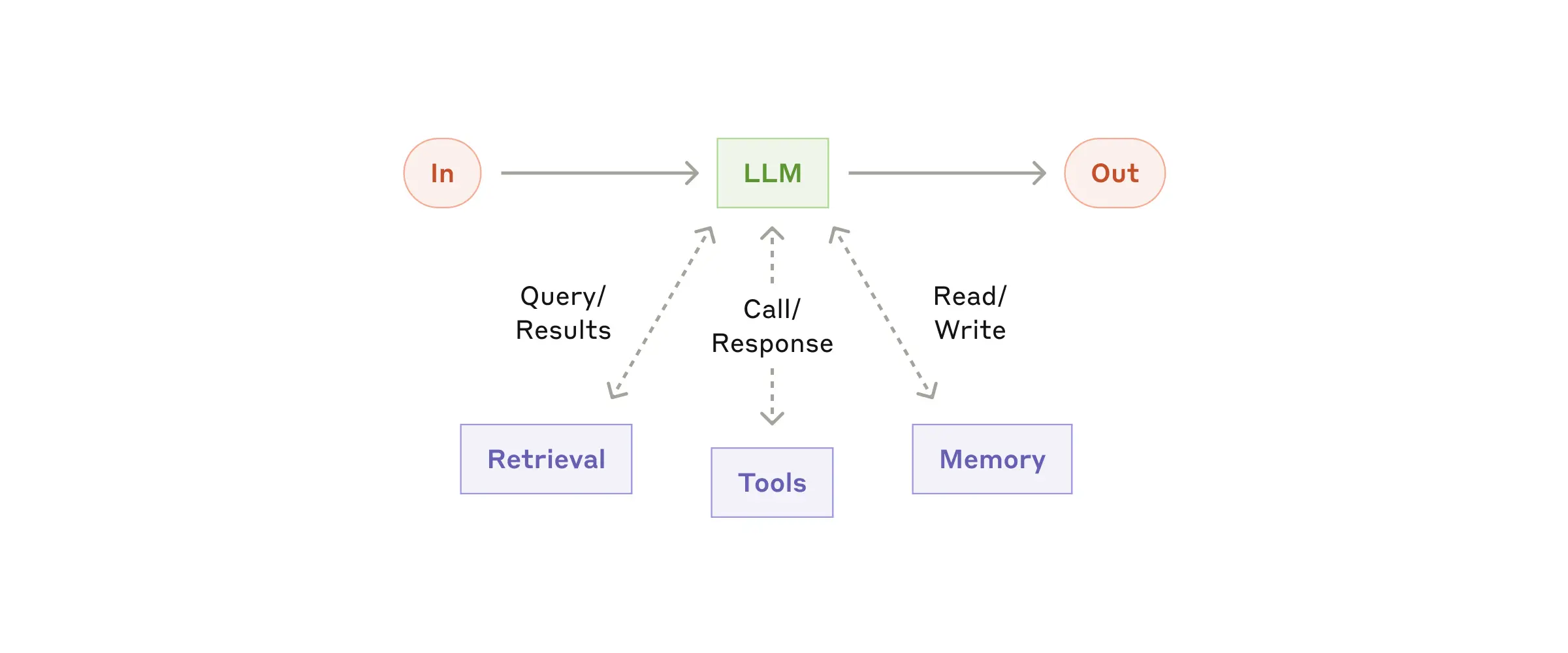

Augmented LLM

An LLM enhanced with retrieval, tools, and memory, with these capabilities tailored to your needs.

The Model Context Protocol is one way to implement these augmentations.

Workflows

Prompt Chaining

- a task is broken into sequential steps, with each LLM call using the output of the previous one.

- useful for higher accuracy and tasks that lend themselves to a subtask workflow

- outline -> criteria check -> execution of task is one such pattern
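
The outline -> criteria check -> execution pattern above can be sketched as follows. This is a minimal illustration, not Anthropic's implementation: `LLM`, `chain_outline_to_draft`, and `fake_llm` are hypothetical names, and the model is passed in as a plain callable so the sketch runs without any API.

```python
from typing import Callable

# Hypothetical interface: a function that takes a prompt and returns text.
LLM = Callable[[str], str]


def chain_outline_to_draft(topic: str, llm: LLM) -> str:
    """Run outline -> criteria check -> draft, feeding each output forward."""
    outline = llm(f"Write a short outline for: {topic}")

    # Gate between steps: stop early if the intermediate result is not good enough.
    verdict = llm(f"Does this outline cover the topic? Answer PASS or FAIL.\n{outline}")
    if not verdict.strip().upper().startswith("PASS"):
        raise ValueError("outline failed the criteria check")

    return llm(f"Write the document following this outline:\n{outline}")


def fake_llm(prompt: str) -> str:
    """Stand-in model (assumption) so the chain can run offline."""
    if prompt.startswith("Write a short outline"):
        return "1. Intro\n2. Body"
    if prompt.startswith("Does this outline"):
        return "PASS"
    return "DRAFT based on -> " + prompt.split("\n", 1)[1]


draft = chain_outline_to_draft("agents", fake_llm)
```

Swapping `fake_llm` for a function that calls a real model leaves the chain itself unchanged; the gate is where accuracy is bought at the cost of an extra call.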

Routing

- a router classifies the input and selects which of several follow-on processes (tools, prompts, etc.) should handle it

- can be handled by an LLM or by a classification model/algorithm

- can be used for cost / performance optimization

- can be used for experience optimization (e.g. routing the request to the correct entities)
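
A routing step might look like the sketch below. Everything named here (`route`, `keyword_classifier`, `HANDLERS`, the three labels) is a hypothetical example; the keyword classifier stands in for the LLM or classification model the notes mention.

```python
from typing import Callable, Dict

Handler = Callable[[str], str]


def route(request: str, classify: Callable[[str], str],
          handlers: Dict[str, Handler], default: str) -> str:
    """Classify the request, then hand it to the matching follow-on process."""
    label = classify(request)
    # Unknown labels fall back to the default handler instead of failing.
    handler = handlers.get(label, handlers[default])
    return handler(request)


def keyword_classifier(request: str) -> str:
    """Stand-in for an LLM call or a trained classifier (assumption)."""
    text = request.lower()
    if "refund" in text:
        return "refund"
    if "error" in text or "crash" in text:
        return "technical"
    return "general"


# Each handler could be a different prompt, tool set, or a cheaper/larger model.
HANDLERS: Dict[str, Handler] = {
    "refund": lambda r: "refund-flow: " + r,
    "technical": lambda r: "tech-support-flow: " + r,
    "general": lambda r: "general-flow: " + r,
}

reply = route("The app shows an error on login", keyword_classifier, HANDLERS, "general")
```

Keeping the classifier separate from the handlers means either side can be replaced on its own, for example sending easy requests to a cheaper model.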

Parallelization

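- Partitioning (sectioning) a task into independent subtasks that run in parallel

- useful for speed (e.g. running guardrails alongside the main response, or running evals)

- Voting: running the same task several times to get diverse outputs and increase confidence

- e.g. run several different checks over the same code for vulnerabilities and flag it if any of them finds a problem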
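
Both variants can be sketched with the standard library's thread pool. This is an assumption-laden illustration: `run_parallel`, `vote_flagged`, and the three string-matching checkers are hypothetical stand-ins for independent LLM calls.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, List, Sequence


def run_parallel(tasks: Sequence[Callable[[], object]]) -> List[object]:
    """Sectioning: run independent tasks concurrently; results keep input order."""
    with ThreadPoolExecutor(max_workers=max(1, len(tasks))) as pool:
        futures = [pool.submit(task) for task in tasks]
        return [future.result() for future in futures]


def vote_flagged(code: str, checkers: Sequence[Callable[[str], bool]],
                 threshold: int = 1) -> bool:
    """Voting: flag the code if at least `threshold` checkers report a problem."""
    # Bind each checker as a default argument so every task calls its own checker.
    votes = run_parallel([lambda check=check: check(code) for check in checkers])
    return sum(bool(vote) for vote in votes) >= threshold


# Hypothetical checkers; in practice each could be a differently prompted LLM call.
def uses_eval(code: str) -> bool:
    return "eval(" in code


def uses_shell(code: str) -> bool:
    return "shell=True" in code


def hardcoded_password(code: str) -> bool:
    return "password =" in code.lower()


CHECKERS = [uses_eval, uses_shell, hardcoded_password]
flagged = vote_flagged("result = eval(user_input)", CHECKERS)
```

Raising `threshold` trades false positives for false negatives: a threshold of 1 flags on any single vote, while a higher value requires agreement.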

Orchestrator-Workers

- an orchestrator breaks a task into subtasks, delegates them to workers, and then combines the results.

- use for complex tasks where you can't predict the subtasks

- more flexible than parallelization, because the subtasks are decided at run time rather than pre-defined

- good for making changes to multiple entities or retrieving information from multiple sources when searching for relevant matches
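
The shape of the pattern is sketched below, under the assumption that planning, working, and combining are each a separate call. `orchestrate` and the three `fake_*` functions are hypothetical; in a real system each would typically wrap an LLM call.

```python
from typing import Callable, List


def orchestrate(task: str,
                plan: Callable[[str], List[str]],
                worker: Callable[[str], str],
                combine: Callable[[str, List[str]], str]) -> str:
    """The orchestrator decides the subtasks at run time, then merges worker output."""
    subtasks = plan(task)                      # not known in advance
    results = [worker(subtask) for subtask in subtasks]
    return combine(task, results)


def fake_plan(task: str) -> List[str]:
    """Stand-in planner (assumption): split the request on ' and '."""
    return [part.strip() for part in task.split(" and ")]


def fake_worker(subtask: str) -> str:
    """Stand-in worker (assumption): pretend the subtask was carried out."""
    return f"done({subtask})"


def fake_combine(task: str, results: List[str]) -> str:
    """Stand-in synthesis step (assumption): join the worker results."""
    return "; ".join(results)


summary = orchestrate("update config and update docs",
                      fake_plan, fake_worker, fake_combine)
```

The workers run sequentially here for clarity; since they are independent, they could also be dispatched in parallel.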

Evaluator-optimizer

- one LLM call generates a response, and a second call evaluates it and feeds its critique back to the generator, in a loop

- use when responses measurably improve once a human articulates feedback, and the LLM can provide that kind of feedback itself

- e.g. translation -> evaluation -> feedback
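
The generate -> evaluate -> feedback loop can be sketched like this. The names `refine`, `fake_generate`, and `fake_evaluate` are hypothetical, and the evaluator here is a trivial capitalization check standing in for an LLM critique.

```python
from typing import Callable, Tuple


def refine(task: str,
           generate: Callable[[str, str], str],
           evaluate: Callable[[str, str], Tuple[bool, str]],
           max_rounds: int = 3) -> str:
    """Regenerate with the evaluator's feedback until accepted or out of rounds."""
    feedback = ""
    draft = ""
    for _ in range(max_rounds):
        draft = generate(task, feedback)
        accepted, feedback = evaluate(task, draft)
        if accepted:
            break
    return draft


def fake_generate(task: str, feedback: str) -> str:
    """Stand-in generator (assumption): applies the feedback if there is any."""
    text = "bonjour le monde"
    if "capitalize" in feedback:
        text = text.capitalize()
    return text


def fake_evaluate(task: str, draft: str) -> Tuple[bool, str]:
    """Stand-in evaluator (assumption): accept drafts that start with a capital."""
    if draft[:1].isupper():
        return True, ""
    return False, "capitalize the first letter"


translation = refine("Translate 'hello world' into French", fake_generate, fake_evaluate)
```

The `max_rounds` cap keeps the loop from running indefinitely when the evaluator is never satisfied; the last draft is returned either way.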

Agents

Agents handle complex inputs, reason and plan, use tools, and recover from errors. They interact with the environment and the user to assess their progress and reliability, and they provide human control points.

Essentially they are just LLMs using tools based on environmental feedback in a loop.

Use them for problems where the steps are unknown and there is no fixed path, and where there are benefits from scaling in a trusted environment with guardrails.
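
The "tools in a loop" idea can be sketched as below. This is a simplified illustration with hypothetical names (`run_agent`, `fake_decide`, `TOOLS`); `decide` stands in for the LLM choosing the next action, and the step limit is one example of a guardrail.

```python
from typing import Callable, Dict, List, Tuple

# (tool_name, argument); the special name "finish" ends the loop with an answer.
Action = Tuple[str, str]


def run_agent(goal: str,
              decide: Callable[[str, List[str]], Action],
              tools: Dict[str, Callable[[str], str]],
              max_steps: int = 5) -> str:
    """Loop: pick an action, run the tool, feed the observation back."""
    history: List[str] = []
    for _ in range(max_steps):
        name, arg = decide(goal, history)
        if name == "finish":
            return arg
        if name not in tools:
            observation = f"error: unknown tool {name!r}"
        else:
            try:
                observation = tools[name](arg)
            except Exception as exc:  # report the failure so the agent can recover
                observation = f"error: {exc}"
        history.append(f"{name}({arg}) -> {observation}")
    raise RuntimeError("step limit reached before the agent finished")


def fake_decide(goal: str, history: List[str]) -> Action:
    """Stand-in policy (assumption): call `add` once, then report its result."""
    if not history:
        return ("add", "2 3")
    return ("finish", history[-1].rsplit("-> ", 1)[1])


TOOLS = {"add": lambda arg: str(sum(int(x) for x in arg.split()))}

answer = run_agent("add 2 and 3", fake_decide, TOOLS)
```

Tool errors are returned as observations rather than raised, which is what lets the model see the failure and try something else on the next step.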

Agents in Practice & Use Cases

- Customer support

- follows a conversational flow that benefits from tools, retrieval, and the ability to take actions.

- Coding

- the problem space is well-defined and structured

Prompt Engineering Your Tools

Anthropic notes that while building their agent for SWE-bench, they spent more time optimizing the tools than the overall prompt.

- Tool definitions and specs should be given as much prompt engineering as your overall prompts.

Ensure that tools are easy to use by the model:

- give the model enough tokens to work with

- keep the format close to what the model has seen in text online

- don't have formatting overhead (string escaping, counting lines, etc)

- ensure names / descriptions are understandable / obvious to the model

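- test how the model uses the tools: run many example inputs, see what mistakes it makes, and iterate

- change the arguments so it's harder to make mistakes (e.g. require absolute filepaths instead of relative ones)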

Tool definitions should have:

- example usage

- edge cases

- input format requirements

- clear boundaries from other tools
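
As an illustration of the checklist above, here is a sketch of a single tool definition plus an argument check that enforces absolute paths. The tool (`read_file`), its wording, the `write_file` tool it refers to, and `validate_read_file_args` are all hypothetical; the `name` / `description` / `input_schema` layout is an assumption modeled on JSON-Schema-style tool definitions, so check your API's reference for the exact field names.

```python
from pathlib import PurePosixPath

# Hypothetical tool definition: the description carries example usage,
# edge cases, input format requirements, and the boundary to other tools.
READ_FILE_TOOL = {
    "name": "read_file",
    "description": (
        "Read a UTF-8 text file and return its contents. "
        "Example: {\"path\": \"/repo/src/main.py\"}. "
        "Input format: `path` must be an absolute POSIX path. "
        "Edge cases: relative paths are rejected; a missing file returns an error message. "
        "Boundary: this tool only reads; use write_file to modify files."
    ),
    "input_schema": {
        "type": "object",
        "properties": {
            "path": {"type": "string", "description": "Absolute path to the file."}
        },
        "required": ["path"],
    },
}


def validate_read_file_args(args: dict) -> str:
    """Reject relative paths up front so a wrong working directory cannot matter."""
    path = args.get("path")
    if not isinstance(path, str) or not PurePosixPath(path).is_absolute():
        raise ValueError("path must be an absolute path such as /repo/src/main.py")
    return path


checked = validate_read_file_args({"path": "/repo/src/main.py"})
```

Returning a specific error message for a rejected argument gives the model something concrete to correct on its next attempt.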